Setps to upgrade database from 10gR2 RAC to 11gR2 RAC

This Document shows the step by step of upgrading database from

10gR2 RAC to 11gR2 RAC. I have chosen the below upgrade path which will allow

me to upgrade 10gR2 Clusterware and ASM to 11gR2 Grid infrastructure. In this

upgrade Path, the original 10gR2 CRS installation was made hidden as if there

is no CRS existed. Then, the fresh installation of 11gR2 Grid Infrastructure

was taken place and 10gR2 ASM diskgroups were moved and mounted using the new

11gR2 Grid HOME. At this stage, the original 10gR2 RAC database was managed by

11gR2 Grid HOME. At the end, the 11gR2 RAC database home was installed and the

original 10gR2 RAC database was upgraded to 11gR2 RAC manually.

1. Backup /etc/inittab , /var/opt/oracle , /etc/init.d

2. /oraolap_db

Backup inittab file:-------

mkdir -p /oraolap_crs/backup_crs_04may13

cd /oraolap_crs/backup_crs_04may13

cp /etc/inittab /oraolap_crs/backup_crs_04may13/etc_inittab

cp /etc/inittab /etc/inittab_bkp_04may13

****************************************************************************

backup "/var/opt/oracle" directory:-------------

mkdir -p /oraolap_crs/backup_crs_04may13/var_opt_oracle

mkdir -p /var/opt/oracle_bkp_04may13

cp -r /var/opt/oracle /var/opt/oracle_bkp_04may13 ---- frm root

mv /var/opt/oracle /oraolap_crs/backup_crs_04may13/var_opt_oracle

****************************************************************************

Backup /etc/init.d

mkdir -p /oraolap_crs/backup_crs_04may13/etc_init_d

cp /etc/init.d/init* /etc/init.d/init_d_bkp_04may13

cp /etc/init.d/init* /oraolap_crs/backup_crs_04may13/etc_init_d

mv /etc/init.d/init* /oraolap_crs/backup_crs_04may13/etc_init_d

dd=

The Upgrade Process is composed of below Stages:

• Pre-Upgrade Tasks

• Hide the 10gR2 Clusterware installation.

• Install 11gR2 Grid Infrastructure.

• Move 10gR2 ASM Diskgroups to 11gR2 Grid Infrastructure.

• Register10gR2 RAC database and services to 11gR2 Grid Infrastructure.

• Install 11gR2 RAC for database home.

• Manually upgrade original 10gR2 RAC database to 11gR2 RAC.

Pre-Upgrade tasks:

• Install/Upgrade RPMs required for 11gR2 RAC Installation

• Add SCAN VIPs to the DNS

• Setup of Network Time Protocol

• Start the nscd on all the RAC nodes

• Create 3 ASM Disks for 11gR2 OCR and Voting Disks.

• Backing up 10gR2 existing HOMEs and database

Minimum Required RPMs for 11gR2 RAC on OEL 5.5 (All the 3 RAC Nodes):

Below command verifies whether the specified rpms are installed or not. Any missing rpms can be installed from the OEL Media Pack

rpm -q binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel elfutils-libelf-devel-static \

gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers kernel-headers ksh libaio libaio-devel \

libgcc libgomp libstdc++ libstdc++-devel make numactl-devel sysstat unixODBC unixODBC-devel

I had to install below RPM.

numactl-devel Located on the 3rd CD of OEL 5.5 Media pack.

[root@node1 ~]# rpm -ivh numactl-devel-0.9.8-11.el5.i386.rpm

warning: numactl-devel-0.9.8-11.el5.i386.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:numactl-devel ########################################### [100%]

[root@node1 ~]#

I had to upgrade the cvuqdisk RPM by removing and installing the same with higher version. This step is also taken care by rootupgrade.sh script.

cvuqdisk Available on Grid Infrastructure Media (under rpm folder)

rpm -e cvuqdisk

export CVUQDISK_GRP=oinstall

echo $CVUQDISK_GRP

rpm -ivh cvuqdisk-1.0.7-1.rpm

Add SCAN VIPs to DNS:

SCAN VIPS to configure in DNS which resolves to lab-scan.hingu.net:

192.168.2.151

192.168.2.152

192.168.2.153

HERE is the existing DNS setup. In that setup, the below two files were modified with the entry in RED to add these SCAN VIPs into the DNS.

/var/named/chroot/var/named/hingu.net.zone

/var/named/chroot/var/named/2.168.192.in-addr.arpa.zone

/var/named/chroot/var/named/hingu.net.zone

$TTL 1d

hingu.net. IN SOA lab-dns.hingu.net. root.hingu.net. (

100 ; se = serial number

8h ; ref = refresh

5m ; ret = update retry

3w ; ex = expiry

3h ; min = minimum

)

IN NS lab-dns.hingu.net.

; DNS server

lab-dns IN A 192.168.2.200

; RAC Nodes Public name

node1 IN A 192.168.2.1

node2 IN A 192.168.2.2

node3 IN A 192.168.2.3

; RAC Nodes Public VIPs

node1-vip IN A 192.168.2.51

node2-vip IN A 192.168.2.52

node3-vip IN A 192.168.2.53

; 3 SCAN VIPs

lab-scan IN A 192.168.2.151

lab-scan IN A 192.168.2.152

lab-scan IN A 192.168.2.153

; Storage Network

nas-server IN A 192.168.1.101

node1-nas IN A 192.168.1.1

node2-nas IN A 192.168.1.2

node3-nas IN A 192.168.1.3

/var/named/chroot/var/named/2.168.192.in-addr.arpa.zone

$TTL 1d

@ IN SOA lab-dns.hingu.net. root.hingu.net. (

100 ; se = serial number

8h ; ref = refresh

5m ; ret = update retry

3w ; ex = expiry

3h ; min = minimum

)

IN NS lab-dns.hingu.net.

; DNS machine name in reverse

200 IN PTR lab-dns.hingu.net.

; RAC Nodes Public Name in Reverse

1 IN PTR node1.hingu.net.

2 IN PTR node2.hingu.net.

3 IN PTR node3.hingu.net.

; RAC Nodes Public VIPs in Reverse

51 IN PTR node1-vip.hingu.net.

52 IN PTR node2-vip.hingu.net.

53 IN PTR node3-vip.hingu.net.

; RAC Nodes SCAN VIPs in Reverse

151 IN PTR lab-scan.hingu.net.

152 IN PTR lab-scan.hingu.net.

153 IN PTR lab-scan.hingu.net.

Restart the DNS Service (named):

service named restart

NOTE: nslookup for lab-scan should return names in random order every time.

Network Time Protocol Setting (On all the RAC Nodes):

Oracle Time Synchronization Service is chosen to be used over the Linux system provided ntpd. So, ntpd needs to be deactivated and deinstalled to avoid any possibility of it being conflicted with the Oracle’s Cluster Time Sync Service (ctss).

# /sbin/service ntpd stop

# chkconfig ntpd off

# mv /etc/ntp.conf /etc/ntp.conf.org

Also remove the following file:

/var/run/ntpd.pid

Network Service Cache Daemon (all the RAC nodes)

The Network Service Cache Daemon was started on all the RAC

nodes.

Service nscd start

On the rest of the Nodes:

oracleasm scandisks

Steps I followed to take the Backup of ORACLE_HOMEs before the

upgrade:

On node1:

mkdir backup

cd backup

dd if=/dev/dev/raw1 of=ocr_disk_10gr2.bkp

dd if=/dev/dev/raw3 of=voting_disk_10gr2.b

tar cvf node1_crs_10gr2.tar /u01/app/oracle/crs/*

tar cvf node1_asm_10gr2.tar /u01/app/oracle/asm/*

tar cvf node1_db_10gr2.tar /u01/app/oracle/db/*

tar cvf node1_etc_oracle /etc/oracle/*

cp /etc/inittab etc_inittab

mkdir etc_init_d

cd etc_init_d

cp /etc/init.d/init* .

On node2:

mkdir backup

cd backup

tar cvf node2_crs_10gr2.tar /u01/app/oracle/crs/*

tar cvf node2_asm_10gr2.tar /u01/app/oracle/asm/*

tar cvf node2_db_10gr2.tar /u01/app/oracle/db/*

tar cvf node2_etc_oracle /etc/oracle/*

cp /etc/inittab etc_inittab

mkdir etc_init_d

cd etc_init_d

cp /etc/init.d/init* .

On node3:

mkdir backup

cd backup

tar cvf node3_crs_10gr2.tar /u01/app/oracle/crs/*

tar cvf node3_asm_10gr2.tar /u01/app/oracle/asm/*

tar cvf node3_db_10gr2.tar /u01/app/oracle/db/*

tar cvf node3_etc_oracle /etc/oracle/*

cp /etc/inittab etc_inittab

mkdir etc_init_d

cd etc_init_d

cp /etc/init.d/init* .

RMAN full database backup was taken.

RMAN full database backup was taken.

bash-3.2$ cat /etc/resolv.conf

options attempts: 2

options timeout: 1

search uninor.local

nameserver 172.30.70.12

nameserver 172.30.70.14

bash-3.2$ cat /etc/nsswitch.conf

#

# Copyright 2006 Sun Microsystems, Inc. All rights reserved.

# Use is subject to license terms.

#

# ident "@(#)nsswitch.files 1.14

06/05/03 SMI"

#

# /etc/nsswitch.files:

#

# An example file that could be copied over to /etc/nsswitch.conf;

it

# does not use any naming service.

#

# "hosts:" and "services:" in this file are

used only if the

# /etc/netconfig file has a "-" for nametoaddr_libs of

"inet" transports.

passwd: files

group: files

hosts: files dns-----

ipnodes: files

networks: files

Put entry in NTP:

bash-3.2$ cat /etc/inet/ntp.conf

# ident "@(#)ntp.client 1.3 00/07/17 SMI"

#

# /etc/inet/ntp.client

#

# An example file that could be copied over to /etc/inet/ntp.conf; it

# provides a configuration for a host that passively waits for a server

# to provide NTP packets on the ntp multicast net.

#

#server 172.30.254.113

server 172.30.254.114

server 172.30.254.115

#multicastclient 224.0.1.1

slewalways yes

disable pllHide the 10gR2 CRS:

In order to install 11gR2 GI, it is required to hide the existing 10gR2 CRS so that 11gR2 GI is installed without any conflict with 10gR2 CRS.

Shutdown CRS on all the RAC nodes

crsctl stop crs

renamed these files/Directories

mv /etc/oracle /etc/oracle_bkp

mkdir /etc/init.d/bkp

mv /etc/init.d/init* /etc/init.d/bkp

removed below lines from the /etc/inittab (the inittab was already backed up in Pre-Upgrade tasks as well)

h1:35:respawn:/etc/init.d/init.evmd run >/dev/null 2>&1

removed the network socket files:

rm -rf /tmp/.oracle

rm -rf /var/tmp/.oracle

rebooted all the RAC nodes at this stage

reboot

Install 11gR2 Grid Infrastructure:

Grid Infrastructure installation process:

Installation Option:

Install and Configure Grid Infrastructure for a Cluster

Installation Type:

Advanced Installation

Product Language:

English

Grid Plug and Play:

Cluster Name: lab

SCAN Name: lab-scan.hingu.net(nsswitch.conf)

SCAN Port: 1525

Configure GNS: Unchecked

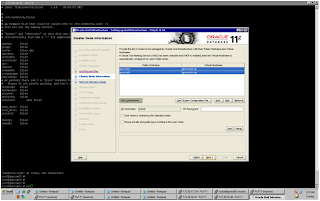

Cluster Node Information:

Entered the Hostname and VIP names of the Cluster Nodes.

Checked the SSH connectivity

Network Interface Usage:

OUI picked up all the interfaces correctly. I did not have to make any changes here.

Storage Option:

Automatic Storage Management (ASM)

Create ASM Disk Group:

Disk Group Name: GIS_FILES

Redundancy: Normal

Candidate Disks: ORCL:GISDSK01, ORCL:GISDSK02, ORCL:GISDSK03

ASM Password:

Use Same Password for these accounts. (Ignored password warnings.)

Failure Isolation:

Do not use Intelligent Platform Management Interface (IPMI)

Operating System Groups:

ASM Database Administrator (OSDBA) Group: dba

ASM Instance Administrator Operator (OSOPER) Group: oinstall

ASM Instance Administrator (OSASM) Group: oinstall

Installation Location:

Oracle Base: /u01/app/oracle

Software Location: /u01/app/grid11201

Create Inventory:

Inventory Directory: /u01/app/oraInventory

Prerequisite Checks:

No issue/Errors

Summary Screen:

Verified the information here and pressed “Finish” to start installation.

At the End of the installation, the root.sh script needed to be executed as root user.

MAKE SURE TO RUN THE SCRIPTS ONE BY ONE ON EACH SERVER.

EXAMPLE:- /oraolap_grid11g/oraInventory/orainstRoot.sh----on node 1 then,/oraolap_grid11g/oraInventory/orainstRoot.sh---- on node 2 then,

/oraolap_grid11g/GRID/root.sh--- on node 1 then,

/oraolap_grid11g/GRID/root.sh--- on node 2

/u01/app/grid11201/root.sh

Verified that the status of the installation using below set of commands.

crsctl check cluster –all

crs_stat –t –v

crsctl check ctss

The GSD and OC4J resources are by default disabled. Enabled GSD them as below.

srvctl enable nodeapps –g

srvctl start nodeapps –n node1

srvctl start nodeapps –n node2

srvctl start nodeapps –n node3

srvctl enable oc4j

srvctl start oc4j

Invoked netca from 11gR2 Grid Infrastructure Home to reconfigure the listener “LISTENER”

/u01/app/oracle/grid11201/bin/netca

Remove default listener and add new one.

MAKE SURE TO RUN THE SCRIPTS ONE BY ONE ON EACH SERVER.

EXAMPLE:-

/oraolap_grid11g/oraInventory/orainstRoot.sh----on node 1 then,

/oraolap_grid11g/oraInventory/orainstRoot.sh---- on node 2 then,

/oraolap_grid11g/GRID/root.sh--- on node 1 then,

/oraolap_grid11g/GRID/root.sh--- on node 2

root@gnncrm03 #

/oraolap_grid11g/oraInventory/orainstRoot.sh

Changing permissions of

/oraolap_grid11g/oraInventory.

Adding read,write permissions for

group.

Removing read,write,execute

permissions for world.

Changing groupname of

/oraolap_grid11g/oraInventory to oinstall.

The execution of the script is

complete.

root@gnncrm03 # May 5 06:32:17 gnncrm03 sendmail[14938]: [ID

801593 mail.alert] r3U64rv6004939: queueup: cannot create ./tfr3U64rv6004939,

euid=25: File exists

root@gnncrm03 #

/oraolap_grid11g/GRID/root.sh

Performing root user operation

for Oracle 11g

The following environment

variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /oraolap_grid11g/GRID

Enter the full pathname of the

local bin directory: [/usr/local/bin]: May

5 06:32:55 gnncrm03 sendmail[11204]: [ID 801593 mail.alert]

r3U3YrvM001067: queueup: cannot create ./tfr3U3YrvM001067, euid=25: File exists

The file "dbhome"

already exists in /usr/local/bin.

Overwrite it? (y/n) [n]:

The file "oraenv"

already exists in /usr/local/bin.

Overwrite it? (y/n) [n]:

The file "coraenv"

already exists in /usr/local/bin.

Overwrite it? (y/n) [n]:

Creating /var/opt/oracle/oratab

file...

Entries will be added to the /var/opt/oracle/oratab

file as needed by

Database Configuration Assistant

when a database is created

Finished running generic part of

root script.

Now product-specific root actions

will be performed.

Using configuration parameter

file: /oraolap_grid11g/GRID/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during

installation

May 5 06:33:25 gnncrm03 sendmail[4235]: [ID

801593 mail.alert] r3U3nrvM013338: queueup: cannot create ./tfr3U3nrvM013338,

euid=25: File exists

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

May 5 06:34:11 gnncrm03 sendmail[631]: [ID 801593

mail.alert] r3U0Yrv9002111: queueup: cannot create ./tfr3U0Yrv9002111, euid=25:

File exists

peer user cert

pa user cert

Adding Clusterware entries to

inittab

CRS-2672: Attempting to start

'ora.mdnsd' on 'gnncrm03'

CRS-2676: Start of 'ora.mdnsd' on

'gnncrm03' succeeded

CRS-2672: Attempting to start

'ora.gpnpd' on 'gnncrm03'

CRS-2676: Start of 'ora.gpnpd' on

'gnncrm03' succeeded

CRS-2672: Attempting to start

'ora.cssdmonitor' on 'gnncrm03'

CRS-2672: Attempting to start

'ora.gipcd' on 'gnncrm03'

CRS-2676: Start of

'ora.cssdmonitor' on 'gnncrm03' succeeded

CRS-2676: Start of 'ora.gipcd' on

'gnncrm03' succeeded

CRS-2672: Attempting to start

'ora.cssd' on 'gnncrm03'

CRS-2672: Attempting to start

'ora.diskmon' on 'gnncrm03'

CRS-2676: Start of 'ora.diskmon'

on 'gnncrm03' succeeded

CRS-2676: Start of 'ora.cssd' on

'gnncrm03' succeeded

ASM created and started

successfully.

Disk Group OCR_VOTE_DISK created

successfully.

clscfg: -install mode specified

Successfully accumulated

necessary OCR keys.

Creating OCR keys for user

'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting

disk 49d0ab0acd594fb3bf576a95628c26dd.

Successful addition of voting

disk 7592353209f04feebf8f8a26edbda59b.

Successful addition of voting

disk 4072c820eed94ffbbf2770004ac5e8a7.

Successfully replaced voting disk

group with +OCR_VOTE_DISK.

CRS-4256: Updating the profile

CRS-4266: Voting file(s)

successfully replaced

## STATE

File Universal Id

File Name Disk group

-- -----

-----------------

--------- ---------

1. ONLINE

49d0ab0acd594fb3bf576a95628c26dd (/dev/rdsk/quorum_disk01)

[OCR_VOTE_DISK]

2. ONLINE

7592353209f04feebf8f8a26edbda59b (/dev/rdsk/quorum_disk02)

[OCR_VOTE_DISK]

3. ONLINE

4072c820eed94ffbbf2770004ac5e8a7 (/dev/rdsk/quorum_disk03)

[OCR_VOTE_DISK]

Located 3 voting disk(s).

May 5 06:40:04 gnncrm03 sendmail[17865]: [ID

801593 mail.alert] r3U2YrvS011696: queueup: cannot create ./tfr3U2YrvS011696,

euid=25: File exists

CRS-2672: Attempting to start

'ora.asm' on 'gnncrm03'

CRS-2676: Start of 'ora.asm' on

'gnncrm03' succeeded

CRS-2672: Attempting to start

'ora.OCR_VOTE_DISK.dg' on 'gnncrm03'

CRS-2676: Start of

'ora.OCR_VOTE_DISK.dg' on 'gnncrm03' succeeded

May 5 06:42:59 gnncrm03 sendmail[14938]: [ID

801593 mail.alert] r3U0Jrv4019370: queueup: cannot create ./tfr3U0Jrv4019370,

euid=25: File exists

May 5 06:44:35 gnncrm03 sendmail[11204]: [ID

801593 mail.alert] r3TN4rvU017762: queueup: cannot create ./tfr3TN4rvU017762,

euid=25: File exists

CRS-2672: Attempting to start

'ora.registry.acfs' on 'gnncrm03'

CRS-2676: Start of 'ora.registry.acfs'

on 'gnncrm03' succeeded

Configure Oracle Grid

Infrastructure for a Cluster ... succeeded

root@gnncrm03 # May 5 06:45:16 gnncrm03 sendmail[631]: [ID 801593

mail.alert] r3TJ4rvg025402: queueup: cannot create ./tfr3TJ4rvg025402, euid=25:

File exists

May 5 06:45:26 gnncrm03 sendmail[4235]: [ID

801593 mail.alert] r3TNnrwe025243: queueup: cannot create ./tfr3TNnrwe025243,

euid=25: File exists

After the successful completion of this script, the 11g R2 High Availability Service (CRS, CSS and EVMD) started up and running.

Verified that the status of the installation using below set of commands.

crsctl check cluster –all

crs_stat –t –v

crsctl check ctss

The GSD and OC4J resources are by default disabled. Enabled GSD them as below.

srvctl enable nodeapps –g

srvctl start nodeapps –n node1

srvctl start nodeapps –n node2

srvctl start nodeapps –n node3

srvctl enable oc4j

srvctl start oc4j

Invoked netca from 11gR2 Grid Infrastructure Home to reconfigure the listener “LISTENER”

/u01/app/oracle/grid11201/bin/netca

Remove default listener and add new one.

Verified that the status of the installation using below set of commands.

crsctl check cluster –all

crs_stat –t –v

crsctl check ctss

The GSD and OC4J resources are by default disabled. Enabled GSD them as below.

srvctl enable nodeapps –g

srvctl start nodeapps –n node1

srvctl start nodeapps –n node2

srvctl start nodeapps –n node3

srvctl enable oc4j

srvctl start oc4j

Invoked netca from 11gR2 Grid Infrastructure Home to reconfigure the listener “LISTENER”

/u01/app/oracle/grid11201/bin/netca

Remove default listener and add new one.

Move 10gR2 ASM Diskgroups to 11gR2 Grid Infrastructure:

Invoked the asmca from the 11gR2 Grid Infrastructure HOME (/u01/app/grid11201).

· Mount the FRA and DATA diskgroup using ASMCA. This way, asmca registered and moved the DATA and FRA diskgroups to the 11gR2 GI.

/u01/app/grid11201/bin/asmca

Mount all the disks

While Starting the 10gR2 RAC database, the below error was received. This is because, as per Oracle, 11gR2 has dynamic configuration of cluster and 11gR1 or older releases have static configuration. So, in order to run older version databases in 11gR2, the cluster configuration needs to be made persistent by pinning the nodes.

[oracle@node1 ~]$ which sqlplus

/u01/app/oracle/db/bin/sqlplus

[oracle@node1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 10.2.0.3.0 - Production on Thu Oct 20 01:39:58 2011

Copyright (c) 1982, 2006, Oracle. All Rights Reserved.

Connected to an idle instance.

SQL> startup

ORA-01078: failure in processing system parameters

ORA-01565: error in identifying file '+DATA/labdb/spfilelabdb.ora'

ORA-17503: ksfdopn:2 Failed to open file +DATA/labdb/spfilelabdb.ora

ORA-15077: could not locate ASM instance serving a required diskgroup

ORA-29701: unable to connect to Cluster Manager

SQL>

/u01/app/grid11201/bin/crsctl pin css –n node1 node2 node3

The labdb database was then started using sqlplus from 10gR2 home after pinning the node. Then I had registered labdb to 11gR2 Grid using the 10gR2 srvctl (/u01/app/oracle/db/bin/srvctl and ORACLE_HOME set to 10gR2) . The srvctl to manage 10gR2 RAC database needs to be invoked from 10gR2 home itself until it is upgraded to 11R2 RAC.

Upgrade 10gR2 RAC Database to 11gR2 RAC:

• Install the 11gR2 RAC database software

• Move the Listener LAB_LISTENER to 11gR2 RAC Home.

• Start Manual Process to upgrade the original labdb database from 10gR2 RAC to 11gR2 RAC.

Start the runInstaller from 11gR2 Real Application Cluster (RAC) Software Location:

/home/oracle/db11201/database/runInstaller

Real Application Cluster installation process:

Configure Security Updates:

Email:

Ignore the “Connection Failed” alert.

Installation Option:

Install database software only

Node Selection:

Select All the Nodes (node1,node2 and node3)

Product Language:

English

Database Edition:

Enterprise Edition

Installation Location:

Oracle Base: /u01/app/oracle

Software Location: /u01/app/oracle/db11201

Operating System Groups:

Database Administrator (OSDBA) Group: dba

Database Operator (OSOPER) Group: oinstall

Summary Screen:

Verified the information here and pressed “Finish” to start installation.

At the End of the installation, the below scripts needs to be executed on all the nodes as root user.

/u01/app/oracle/db11201/root.sh

Move the Listener “LAB_LISTENER” from 10gR2 RAC DB Home to 11gR2 RAC database Home:

Invoked the netca from 11gR2 RAC home (/u01/app/oracle/db11201) and added the listener LAB_LISTENER (port TCP:1530) to newly installed 11gR2 RAC home.

Copy the tnsnames.ora files from 10gR2 DB home to 11gR2 RAC home on all the nodes.

ssh node1 cp /u01/app/oracle/db/network/admin/tnsnames.ora /u01/app/oracle/db11201/network/admin/tnsnames.ora

ssh node2 cp /u01/app/oracle/db/network/admin/tnsnames.ora /u01/app/oracle/db11201/network/admin/tnsnames.ora

ssh node3 cp /u01/app/oracle/db/network/admin/tnsnames.ora /u01/app/oracle/db11201/network/admin/tnsnames.ora

Upgrade the Database labdb Manually:

This Metalink Note (ID 837570.1) was followed for manually upgrading database from 10gR2 RAC to 11gR2 RAC.

• Ran the /u01/app/oracle/db11201/rdbms/admin/utlu112i.sql

• Fixed the warning returned from above script regarding absolute parameter

• Ignored the warnings regarding TIMEZONE as no action is needed for timezone file version if DB is 10.2.0.2 or higher.

• Ignored the warning regarding stale statistics and EM.

• Purged the recycle bin using PURGE DBA_RECYCLEBIN

• Copied the instance specific init files and password files from 10gR2 HOME to 11gR2 RAC Home.

• Set the cluster_database to false

• Stopped the database labdb. S

• Set the 11gR2 RAC HOME

• Updated the /etc/oratab file and modify the labdb HOME to 11gR2 HOME

• Started the instance labdb1 on node1 using UPGRADE option of start command.

• Ran the catupgrd.sql from $ORACLE_HOME/rdbms/admin of 11gR2.

sqlplus “/as sysdba”

SQL>spool upgrade_info.log

SQL>@/11g_oracle_home/rdbms/admin/utlu111i.sql

1.3 Check the output of the Pre-Upgrade Information Tool in upgrade_info.logand fix any issues

Obsolete/Deprecated Parameters: [Update Oracle Database 11.1 init.ora or spfile]

**************************************************

–> “background_dump_dest” replaced by “diagnostic_dest”

–> “user_dump_dest” replaced by “diagnostic_dest”

–> “core_dump_dest” replaced by “diagnostic_dest”

To fix this obsolete parameter, comment out from initialization parameter file and replace with new parameter like comment above three deprecated parameters and add *.diagnostic_dest

WARNING: –> Database is using an old timezone file version.

…. Patch the 10.2.0.1.0 database to timezone file version 4

…. BEFORE upgrading the database. Re-run utlu111i.sql after

…. patching the database to record the new timezone file version.

To find time zone file version on source database (10g) run

SQL> select * from v$timezone_file;

If time zone file version is less than 4 then apply time zone patch 5632264 manually for 10.2.0.1 database (more on How to manually upgrade time zone file version on database coming soon … )

1.4 Check invalid objects

SQL> select object_name, owner, object_type from all_objects where status like ‘INVALID’;

1.5 Check version & status of all database components

SQL>select comp_name,version, status from dba_registry;1.6 Take backup of source database i.e. 10g database

1.7 If you are using spfile, create pfile

SQL> create pfile from spfile ;

This will create pfile in 10g $ORACLE_HOME/dbs/init[SID].ora

1.8 Copy initialization file (pfile) from source (10g) to target (11g)

1.9 Adjust initialization parameter specific to 11g like

a) Remove *.background_dump_dest, *.core_dump_dest, *.user_dump_dest and add

*.diagnostic_dest=’/11g_base’ (11g Base Directory)

b) Change

*.compatible=’10.2.0.1.0′

to

*.compatible=’11.1.0′

.

.

2. Upgrade Database

2.1 Shut down source database (10g) - Your downtime starts here

sqlplus “/as sysdba”

SQL>shutdown immediate

2.2 Set your environment variables to Oracle Database 11g Release 1 (11.1) :

export ORACLE_HOME=/u01/oracle/11gbase/11.1.0

export ORACLE_SID=TEST

export PATH=$ORACLE_HOME/bin:$PATH

2.3 Start Upgrade

sqlplus “/as sysdba”

SQL> startup upgrade

Check shared_pool & java_pool size, to set new values

SQL>alter system set java_pool_size=512M;

SQL>alter system set shared_pool_size=800M;

SQL> spool upgrade.log

SQL> @?/rdbms/admin/catupgrd.sql (Upgrade process shut down database after catupgrd.sql)Check alert log in 11g at

/11gBase_Install/diag/rdbms/[sid]/[SID]/trace/alert_[SID].log

• Started the database instance on node1.

• Updated the cluster_database to true in spfile• Re-started the database instance on all the nodes.

Post Upgrade Steps:

• Ran the utlu112s.sql to get the status of the upgrade of each component.

• Ran the catuppst.sql to perform upgrade actions that do not require database in UPGRADE mode.

• Ran the utlrp.sql to recompile any INVALID objectsSQL> select count(*) from dba_objects where status like ‘INVALID’;

.3. Post Upgrade steps

3.1 Check status of database components

SQL>select comp_name,version, status from dba_registry;

• Upgraded the database configuration from 10gR2 to 11gR2 into the OCR by removing the labdb database and adding it back

Srvctl remove database –d CPPRDN

Grid home bin crsctl remove resource

Set new 11g environment to add database

Srvctl status database –d CPPRDNDN

• Added the service oltp to the 11gR2 RAC database.

Final CRS Stack after the upgrade:

Rebooted all the 3 RAC nodes and verified that all the resources come up without any issues/errors.

• Relink rman to the tape: run script /usr/openv/netbackup/bin/oracle_link

• Move scripts to new oracle home and modify cron accordingly.

• Make sure scan can be nslookup by application server. If not check resolv.conf and nsswitch.conf file on the application server.

Oracle Real Application Cluster [10g/11 g R2 RAC]

ReplyDeletewww.21cssindia.com/courses_view.html?id=1

Oracle-Application Online Training, Oracle-Application training, Oracle-Application course contents, Oracle-Application , call us: +919000444287 ...

Upgrade document is very Good.

ReplyDelete